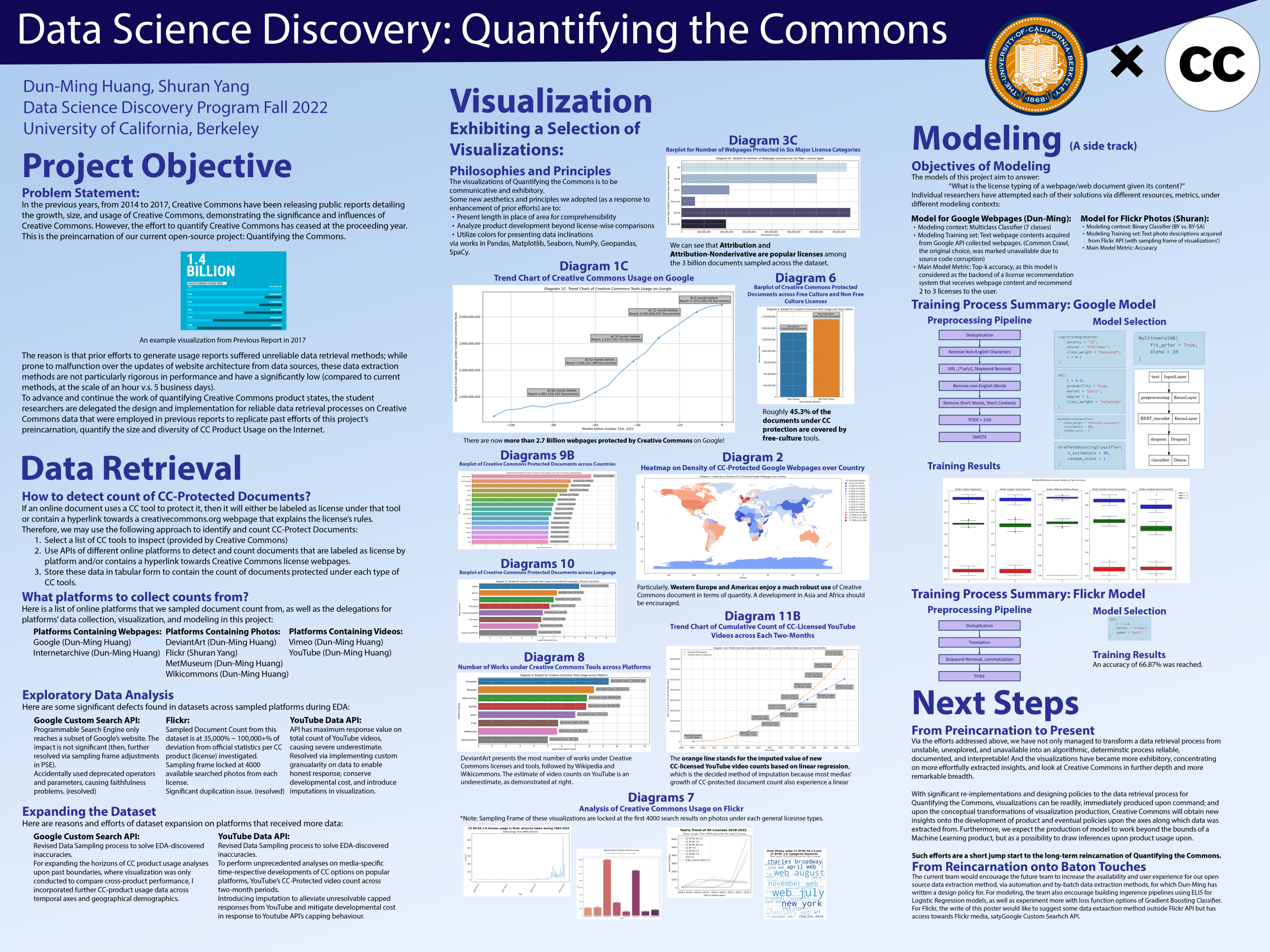

Creative Commons (CC) seeks to quantify the use of CC legal tools, that is, how many works are in the commons. CC legal tools include the licenses (e.g., CC BY, CC BY-NC-SA) and the public domain tools (e.g., CC0 and the Public Domain Mark, PDM). This project would include data collection, analysis, and visualization.

First, this project should create reproducible processes or methodologies for building a dataset of information about works that are CC licensed or dedicated to the public domain. The dataset may be built from platform APIs (e.g., Flickr), Common Crawl data, or other sources. The project should provide a starting point not only for itself but also for future efforts to extend the dataset and the meaning derived from it.
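One reproducible collection step could be sketched as follows, assuming the raw data (e.g., links extracted from Common Crawl pages or license fields returned by a platform API) yields CC legal tool URLs. The sketch normalizes each URL into a consistent identifier and then tallies them. The function names `normalize_legal_tool` and `tally` are hypothetical, and the URL patterns handled are an assumption covering only the common `creativecommons.org` paths, not an exhaustive list.

```python
from collections import Counter
from typing import Iterable, Optional
from urllib.parse import urlparse


def normalize_legal_tool(url: str) -> Optional[str]:
    """Map a CC legal tool URL to a short identifier, or None if unrecognized.

    Assumed path patterns (hypothetical coverage, not exhaustive):
      /licenses/by-nc-sa/4.0/   -> "CC BY-NC-SA 4.0"
      /publicdomain/zero/1.0/   -> "CC0 1.0"
      /publicdomain/mark/1.0/   -> "PDM 1.0"
    Trailing segments such as a jurisdiction port or "deed.en" are ignored.
    """
    parsed = urlparse(url.strip())
    if parsed.netloc.lower() not in ("creativecommons.org", "www.creativecommons.org"):
        return None
    parts = [p for p in parsed.path.lower().split("/") if p]
    if len(parts) < 3:
        return None
    kind, unit, version = parts[0], parts[1], parts[2]
    if kind == "licenses":
        return f"CC {unit.upper()} {version}"
    if kind == "publicdomain" and unit == "zero":
        return f"CC0 {version}"
    if kind == "publicdomain" and unit == "mark":
        return f"PDM {version}"
    return None


def tally(urls: Iterable[str]) -> Counter:
    """Count works per normalized legal tool, skipping unrecognized URLs."""
    counts: Counter = Counter()
    for url in urls:
        tool = normalize_legal_tool(url)
        if tool is not None:
            counts[tool] += 1
    return counts
```

Keeping normalization in a single pure function makes the step easy to re-run against new crawls or API pulls and to extend as new URL patterns are found.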

Second, the project should begin to derive meaning from the dataset. How many works are currently in the commons? How has that number changed over time? How can those works be characterized (e.g., by legal tool, region, or language)? How can the data be managed to allow future trend analysis (e.g., which languages saw the largest growth in legal tool adoption)?
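One way to keep the data ready for future trend analysis is to append dated count snapshots rather than overwrite totals. The sketch below assumes a hypothetical `CountRecord` schema (snapshot date, dimension such as `"language"` or `"legal_tool"`, value, count) and a hypothetical `growth_by_value` helper that answers questions like "which languages saw the largest growth" between two snapshots.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class CountRecord:
    """One observation: how many works had an attribute on a snapshot date.

    Hypothetical schema: `dimension` is e.g. "legal_tool", "language", or
    "region"; `value` is e.g. "CC BY 4.0" or "es".
    """
    snapshot: date
    dimension: str
    value: str
    count: int


def growth_by_value(
    records: Iterable[CountRecord], dimension: str, start: date, end: date
) -> List[Tuple[str, int, int, int]]:
    """Return (value, start_count, end_count, growth) rows, largest growth first.

    Values missing from a snapshot are treated as a count of zero.
    """
    totals: Dict[Tuple[date, str], int] = defaultdict(int)
    for r in records:
        if r.dimension == dimension and r.snapshot in (start, end):
            totals[(r.snapshot, r.value)] += r.count
    values = {value for (_, value) in totals}
    rows = []
    for value in values:
        before = totals.get((start, value), 0)
        after = totals.get((end, value), 0)
        rows.append((value, before, after, after - before))
    # Sort by growth descending, then by value name for a stable order.
    rows.sort(key=lambda row: (-row[3], row[0]))
    return rows
```

Because every snapshot is kept, the same records can later be re-queried along any dimension or date range without recollecting the data.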

Third (optional), how can the data be visualized to communicate meaning and allow exploration?